A while ago I promised myself I would implement real-time video filter effects with Rust WebAssembly. Today, let's follow through on that.

First, let's set up the development environment by following the Rust and WebAssembly tutorial.

After reading the wasm-bindgen documentation, we found that a CanvasRenderingContext2d object can be passed directly from JS to WASM:

```js
import('./pkg')
```

```rust
use web_sys::{CanvasRenderingContext2d, ImageData};
```

This makes the implementation very similar to the JS version:
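As a rough illustration of the per-pixel work such a filter does, here is a minimal sketch in plain Rust. It assumes a simple grayscale filter (the post does not say which effect it implements), and the name `grayscale_in_place` is hypothetical. It operates on a raw RGBA byte slice; in the browser those bytes would come from the ImageData returned by `CanvasRenderingContext2d::get_image_data`, but that step is left out so the sketch compiles without `web_sys`.

```rust
/// Hypothetical grayscale filter over RGBA pixel data (4 bytes per pixel).
/// Modifies the buffer in place and leaves the alpha channel unchanged.
fn grayscale_in_place(data: &mut [u8]) {
    for px in data.chunks_exact_mut(4) {
        // Integer approximation of Rec. 601 luma: 0.299 R + 0.587 G + 0.114 B
        let (r, g, b) = (px[0] as u32, px[1] as u32, px[2] as u32);
        let y = ((299 * r + 587 * g + 114 * b) / 1000) as u8;
        px[0] = y;
        px[1] = y;
        px[2] = y;
        // px[3] (alpha) is not touched
    }
}

fn main() {
    // Two pixels: opaque pure red, then half-transparent white.
    let mut pixels: Vec<u8> = vec![255, 0, 0, 255, 255, 255, 255, 128];
    grayscale_in_place(&mut pixels);
    println!("{:?}", pixels); // [76, 76, 76, 255, 255, 255, 255, 128]
}
```

Since the function only needs a mutable slice of RGBA bytes, the same loop could in principle be reused regardless of how the pixels reach WASM.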


Comparing the JS and Rust WASM versions, we found that Rust WASM performs somewhat better.

Of course, we can also follow the earlier Golang approach and share memory between JS and WASM. A quick search turns up this example:

```rust
use std::ptr;
```

```js
import {
```
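A minimal native sketch of the idea follows. It assumes a fixed-size static buffer; `SIZE`, `BUFFER`, `brighten`, and `read_buffer` are hypothetical names, and `return_pointer` only borrows its name from the import used later in this post (its body here is an assumption). In a real wasm-bindgen build, `return_pointer` would be exported with `#[wasm_bindgen]` and the returned pointer would be a byte offset into `memory.buffer`; here `main` stands in for the JS side by writing through the raw pointer.

```rust
use std::ptr;

// Hypothetical buffer size: one 2x2 RGBA frame (4 bytes per pixel).
const SIZE: usize = 2 * 2 * 4;

// Fixed buffer in the module's memory. Compiled to WASM, its address is a
// byte offset into the linear memory that JS sees as `memory.buffer`.
static mut BUFFER: [u8; SIZE] = [0; SIZE];

/// Returns a raw pointer to the first byte of the shared buffer.
fn return_pointer() -> *mut u8 {
    // `addr_of_mut!` takes the address without creating a `&mut` to the static.
    unsafe { ptr::addr_of_mut!(BUFFER) }.cast::<u8>()
}

/// WASM-side work: adds `delta` to every byte (saturating at 255),
/// addressing each byte by pointer offset from the start of the buffer.
fn brighten(delta: u8) {
    let base = return_pointer();
    for i in 0..SIZE {
        unsafe {
            let p = base.add(i);
            *p = (*p).saturating_add(delta);
        }
    }
}

/// Copies the buffer out, like reading it back through a Uint8Array.
fn read_buffer() -> Vec<u8> {
    unsafe { std::slice::from_raw_parts(return_pointer(), SIZE).to_vec() }
}

fn main() {
    // Stand-in for JS writing one byte through a Uint8Array view.
    unsafe { ptr::write(return_pointer(), 250) };
    brighten(10);
    // The first byte saturates at 255; the other 15 bytes become 10.
    println!("{:?}", read_buffer());
}
```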

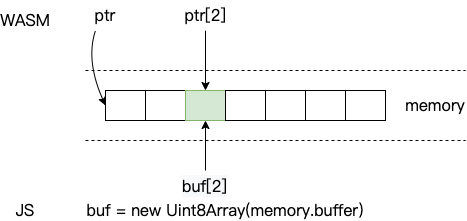

The figure below illustrates how the code above works:

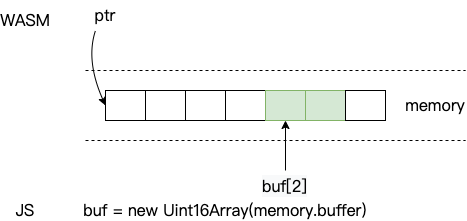

In WASM, you can read or modify the shared memory directly through pointer offsets. In JS, however, memory.buffer is an ArrayBuffer, whose contents cannot be manipulated directly; instead, you need to create a typed array view such as Uint8Array to read and write the buffer. Besides Uint8Array, there are other types such as Uint16Array (see MDN for the full list). They differ mainly in the value range of each element and the number of bytes each element occupies. For example, if the example above used Uint16Array instead, each element would cover two bytes of the buffer, so the same memory would hold half as many elements.
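To make the difference concrete, the sketch below reads the same four bytes the way a Uint8Array and a Uint16Array would. It assumes little-endian byte order, which WASM linear memory uses and which is the common case on the hardware browsers run on; `as_u8_view` and `as_u16_view` are illustrative names.

```rust
/// Reads a byte buffer the way a Uint8Array would: one element per byte.
fn as_u8_view(bytes: &[u8]) -> Vec<u8> {
    bytes.to_vec()
}

/// Reads a byte buffer the way a Uint16Array would on a little-endian
/// platform: one element per 2 bytes, low byte first.
/// Any trailing odd byte is ignored by `chunks_exact`.
fn as_u16_view(bytes: &[u8]) -> Vec<u16> {
    bytes
        .chunks_exact(2)
        .map(|pair| u16::from_le_bytes([pair[0], pair[1]]))
        .collect()
}

fn main() {
    let memory = [1u8, 2, 3, 4];
    println!("{:?}", as_u8_view(&memory)); // [1, 2, 3, 4]
    println!("{:?}", as_u16_view(&memory)); // [513, 1027]: 0x0201 and 0x0403
}
```

On the JS side there is one extra constraint: a Uint16Array view must start at a byte offset that is a multiple of 2, otherwise the constructor throws a RangeError.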

So, with shared memory, we can copy the image data from JS into WASM, modify the shared memory in WASM, and finally read the updated result back out of it. The code looks roughly like this:


```js
import {return_pointer, filter_shared_mem} from 'rust-filter/rust_filter'
```
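The three-step round trip can be sketched in plain Rust as follows. The signature `filter_shared_mem(ptr, len)` is an assumption (only the name appears in the import above), the invert filter is a hypothetical stand-in for whatever effect is applied, and a `Vec<u8>` plays the role of WASM linear memory, with `main` performing the JS-side copy in and read out.

```rust
/// Stand-in for the WASM-side filter: inverts the RGB channels of `len`
/// bytes of RGBA data in place, starting at `ptr`. Alpha is left untouched.
///
/// # Safety
/// `ptr` must be valid for reads and writes of `len` bytes, with no other
/// live references to that memory during the call.
unsafe fn filter_shared_mem(ptr: *mut u8, len: usize) {
    // View the shared region as a mutable byte slice.
    let data = unsafe { std::slice::from_raw_parts_mut(ptr, len) };
    for px in data.chunks_exact_mut(4) {
        px[0] = 255 - px[0];
        px[1] = 255 - px[1];
        px[2] = 255 - px[2];
    }
}

fn main() {
    // Pixels of one 2x1 RGBA frame, as JS would get them from the canvas.
    let frame: [u8; 8] = [10, 20, 30, 255, 200, 100, 0, 128];
    // Stand-in for the shared region of WASM linear memory.
    let mut shared = vec![0u8; frame.len()];

    // 1. JS side: copy the frame into shared memory.
    shared.copy_from_slice(&frame);
    // 2. WASM side: filter in place through the pointer.
    unsafe { filter_shared_mem(shared.as_mut_ptr(), shared.len()) };
    // 3. JS side: read the updated pixels back out.
    let result = shared.clone();
    println!("{:?}", result); // [245, 235, 225, 255, 55, 155, 255, 128]
}
```

The design point is that the pixel data is written into the shared region once per frame and filtered in place, rather than being handed across the JS/WASM boundary as a separate object.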

It turns out that the shared-memory approach performs even better than passing CanvasRenderingContext2d directly: